Open-Source Robot Audition Software: HARK

Research and development on open-source software providing auditory functions for robots

Voice is essential for interaction between systems and people. But it is quite difficult for a robot to tell sounds apart with its own ears. HRI-JP has been working in this field ever since its founding. What’s its approach?

HARK can differentiate and understand 11 people speaking at the same time

HARK is open-source software developed as a collection of research achievements in robot audition. It uses microphone array processing, in which the signals from multiple microphones are processed together, to give a robot auditory functions. Specifically, HARK includes functions for estimating the locations of speakers and other sound sources (sound source localization), extracting the acoustic signal originating from each sound source (sound source separation), and converting speech into text (automatic speech recognition).

While you might think that distinguishing different sounds is a simple task, it is actually quite difficult for a robot to tell sounds apart with its own ears. It would be easier in a quiet room, but human activity takes place in a variety of environments. In a bustling place, voices and other sounds from the target sound source get mixed with ambient noise and other sound sources, making them less discernible.

Since the robot itself is a cluster of motors, it is also a source of noise. Furthermore, what constitutes the target sound source and what is a noise source can change depending on the situation, so there is also the difficulty of having to constantly keep track of all the sources of sound that it hears.

Instead of the robot using its own ears, one possible workaround would be for the speaker to wear a headset. But putting on a headset every time would be a hassle, and preparing enough headsets in case of more speakers would be problematic. So, for robots to be used in everyday life, they should have the ability to tell sounds apart using their own ears. To resolve these issues, “robot audition” was proposed as a field of research originating in Japan and has been shared with the world. We have continued this research at the global forefront in this field.

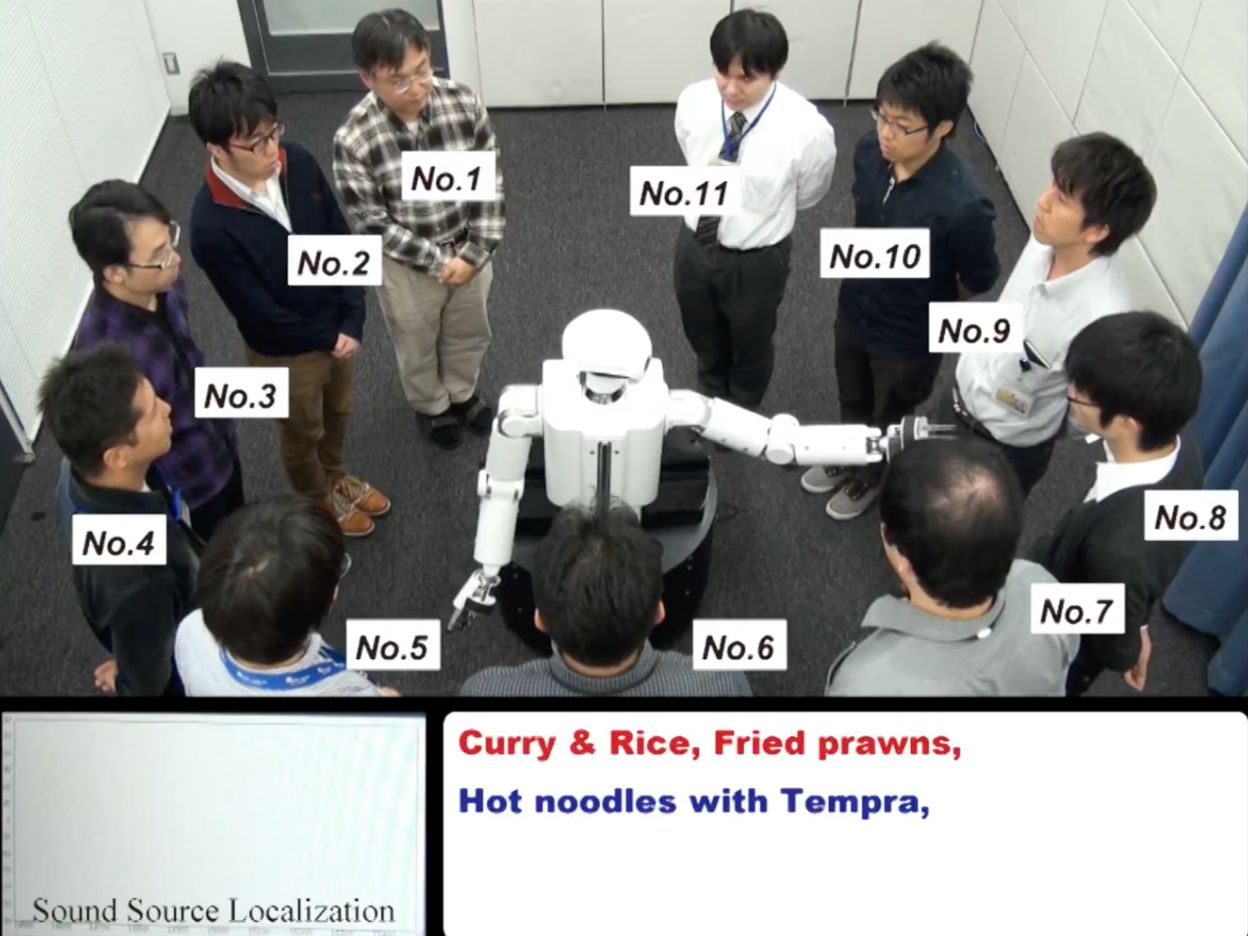

They say, “Seeing is believing,” so let’s look at a video showing the capabilities of HARK. In this video, 11 people order food at the same time. Their voices get all mixed up, and it is impossible to tell what they are saying. But by using 16 microphones mounted on its head, the robot correctly recognizes each of the simultaneous voices.

Prince Shotoku, who appears in the title of the video, was a Japanese politician who lived around the turn of the seventh century. It is said that his hearing was so good he could distinguish several different people speaking at the same time.

In terms of internal processing, HARK first uses a method called Multiple Signal Classification (MUSIC) to estimate the directions of the 11 speakers. The original MUSIC already provides noise-robust sound source localization, and our MUSIC algorithm adds a unique extension to further improve noise robustness. Next, each voice is separated and extracted from the input signal of 11 mixed voices using GHDSS-AS, a unique sound source separation technique. GHDSS-AS relies on the source direction information obtained with MUSIC and on the criterion that “there is absolutely no high-order correlation between any two sound sources” (roughly, any two extracted utterances come from different speakers and differ in content). Finally, each separated voice is recognized.
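To give a feel for the localization step, here is a minimal sketch of textbook narrowband MUSIC for a single source. It is not HARK's extended MUSIC and does not use the HARK API. The uniform linear array with half-wavelength spacing, the ideal covariance matrix, and every function name are assumptions made for illustration. The idea is that a direction whose steering vector has no component in the noise subspace produces a sharp peak in the spectrum.

```python
import cmath
import math


def steering_vector(theta_deg, n_mics, spacing=0.5):
    """Far-field steering vector of a uniform linear array.

    `spacing` is the microphone spacing in wavelengths (assumed geometry).
    """
    phase = 2.0 * math.pi * spacing * math.sin(math.radians(theta_deg))
    return [cmath.exp(-1j * phase * m) for m in range(n_mics)]


def covariance_single_source(theta_deg, n_mics, power=1.0, noise=0.1):
    """Ideal spatial covariance: one source plus uncorrelated sensor noise."""
    a = steering_vector(theta_deg, n_mics)
    return [[power * a[i] * a[j].conjugate() + (noise if i == j else 0.0)
             for j in range(n_mics)] for i in range(n_mics)]


def dominant_eigenvector(R, iterations=100):
    """Power iteration: unit-norm eigenvector of the largest eigenvalue."""
    n = len(R)
    v = [1.0 + 0j] + [0j] * (n - 1)  # never orthogonal to a steering vector
    for _ in range(iterations):
        w = [sum(R[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(abs(x) ** 2 for x in w))
        v = [x / norm for x in w]
    return v


def music_spectrum(R, angles):
    """MUSIC pseudo-spectrum assuming exactly one source.

    The signal subspace is spanned by the dominant eigenvector v, so the
    noise-subspace energy of a steering vector a is a^H (I - v v^H) a.
    """
    n = len(R)
    v = dominant_eigenvector(R)
    spectrum = []
    for theta in angles:
        a = steering_vector(theta, n)
        signal_part = abs(sum(vi.conjugate() * ai for vi, ai in zip(v, a))) ** 2
        noise_part = max(n - signal_part, 0.0)  # ||a||^2 equals n
        spectrum.append(1.0 / (noise_part + 1e-12))
    return spectrum


N_MICS = 8
R = covariance_single_source(30.0, N_MICS)   # hypothetical source at 30 degrees
angles = list(range(-90, 91))                # scan in 1-degree steps
spectrum = music_spectrum(R, angles)
estimated = angles[spectrum.index(max(spectrum))]
print("estimated direction (degrees):", estimated)
```

With several simultaneous speakers, the signal subspace would have one dimension per source, which calls for a full eigendecomposition rather than the single power iteration used here.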

HARK has been downloaded more than 20,000 times a year since becoming open source

HRI-JP has conducted research in this field ever since its founding in 2003. Voices and sounds are one of the most natural interfaces for humans, so auditory functions are considered essential technology for robots and systems to interact with people. Voice also has the advantage that it can be used safely for interaction in busy situations such as when driving a car.

To further accelerate research and disseminate the results to the world, in 2008, HARK was made open source, enabling anyone to freely integrate it into their robots and systems. In the field of computer vision, OpenCV is the de facto standard tool. Our aim is for HARK to have the same presence in the field of audition. In addition to updates of HARK and related software tools, we also offer tutorials every year to promote HARK. HARK is currently downloaded more than 20,000 times a year.

As a result of these ongoing activities, “robot audition” was registered as a field of research with the IEEE Robotics and Automation Society (RAS) in 2014. HARK also played a part in helping this research field originating from Japan gain worldwide recognition.

From discussion analysis to applications in ecology

HARK was originally developed for robots. However, thanks to its outstanding features of real-time sound source localization, sound source separation and automatic speech recognition, application of the technology is now expected to extend beyond robots to various other fields.

The first of these is discussion analysis. By linking speakers and utterances, HARK allows you to extract “who spoke when and for how long,” which means analysis of meetings based on that information is also possible. HARK has already been put to practical use as the basis of discussion analysis services for school education and corporate training.
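As a rough illustration of what “who spoke when and for how long” can look like as data, the sketch below totals speaking time per speaker from a list of utterance segments. The `(speaker, start, end)` tuple format and the function name are hypothetical and are not HARK's actual output format.

```python
from collections import defaultdict


def speaking_time(segments):
    """Sum the duration in seconds of each speaker's utterances.

    `segments` is a list of (speaker, start_sec, end_sec) tuples,
    a made-up format chosen only for this example.
    """
    totals = defaultdict(float)
    for speaker, start, end in segments:
        totals[speaker] += end - start
    return dict(totals)


# Hypothetical meeting excerpt: A and B overlap between 4.0 s and 4.5 s.
segments = [("A", 0.0, 4.5), ("B", 4.0, 6.0), ("A", 7.0, 9.5)]
totals = speaking_time(segments)
print(totals)
```

Because each segment is tied to a separated source rather than to the mixed signal, overlapping speech is counted for both speakers.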

Surprisingly, HARK is also being used in the field of ecology. Information about when, where and what kinds of birds, animals or insects are making sounds is extremely important. Previously, gathering this information relied on manual effort, and because observers had to discern sounds by ear, some sounds were inevitably missed or misheard.

People who had relied on their own hearing to make these observations were initially skeptical about the introduction of technologies like HARK. However, as more results are published, such as the high correlation with human observations and the ability to analyze cases that would otherwise be difficult for humans, these technologies are gradually gaining acceptance as useful tools.

HARK technology has been cultivated by HRI-JP since its early days as part of its basic research in robot audition. In this sense, HARK is an example of “Innovation through Science,” HRI-JP’s overarching principle. As the application of HARK to ecology shows, such emerging buds of innovation give rise to new science, creating a trend of “Science through Innovation.” If technology that embodies “Innovation through Science” can be created in this way, sustained virtuous cycles of innovation and science will follow.

HRI-JP Next Generation of Intelligent Communication and Social Interaction Research Alliance Laboratory

Robots that search for victims at disaster sites have also been equipped with HARK

Of course, HARK technology is also being used in the robotics field. As a project in the Impulsing Paradigm Change through Disruptive Technologies Program (ImPACT), which was launched by the Cabinet Office in 2014, the Tough Robotics Challenge (TRC) aims to develop robots that are tough and can function in severe disaster sites. As part of this project, HARK was installed in drones and snake-like robots as an extreme audition technology.

During a major disaster, drones are able to approach from the sky and search for victims in situations which would be difficult for humans to enter. When a victim is buried under rubble or other debris, the key to finding them is their cries for help, but the problem with drones is the loud noise produced by the high-speed revolution of their blades. But with HARK, we can identify the cries of victims even amidst the noise.

Snake-like robots, on the other hand, can cover entire disaster sites, even under rubble. These robots have a similar problem in that the noise of their motors interferes with rescue operations, but this is again resolved with HARK. In addition, our technology has been used to estimate the attitude, or orientation, of the robots using sound in order to correct the drift of inertial measurement units (IMUs).
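The article does not say how the sound-based attitude estimate is combined with the IMU. One generic way to picture drift correction is a complementary filter, sketched below for a single heading angle. The filter, the gain, the bias value and all names are assumptions for illustration, not the method used in the project.

```python
def fuse_heading(gyro_rates, acoustic_headings, dt, gain=0.05, initial=0.0):
    """Complementary filter for a heading angle in degrees.

    The gyro rate is integrated at every step. Whenever an acoustic
    heading is available (not None), the estimate is nudged toward it.
    """
    heading = initial
    for rate, acoustic in zip(gyro_rates, acoustic_headings):
        heading += rate * dt  # dead reckoning, drifts if the rate is biased
        if acoustic is not None:
            # Smallest signed angle from the estimate to the acoustic heading.
            error = (acoustic - heading + 180.0) % 360.0 - 180.0
            heading += gain * error
    return heading


# Hypothetical scenario: the robot is stationary at 0 degrees, but the
# gyro reports a constant bias of 1 degree per second for 60 seconds.
steps, dt = 600, 0.1
biased_gyro = [1.0] * steps

drift_only = fuse_heading(biased_gyro, [None] * steps, dt)
fused = fuse_heading(biased_gyro, [0.0] * steps, dt)
print("gyro only:", round(drift_only, 2), "with acoustic correction:", round(fused, 2))
```

In this toy setup the gyro-only estimate drifts steadily away from the true heading, while the fused estimate stays within a couple of degrees of it.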

HARK technology is also being used in other HRI-JP projects and at universities, where research and development is ongoing. Going forward, we will continue our activities so that HARK gains further traction in society.

A HARK-equipped drone developed by a TRC team from the Tokyo Institute of Technology, Kumamoto University and Waseda University

| Affiliation | Contact Person |
|---|---|
| Project Leader | ITOYAMA, Katsutoshi |

Voice

ITOYAMA, Katsutoshi

"Listening to sounds with a robot's own ears" may seem like a simple task, but it is actually difficult. This is a challenging research field with a wide range of issues, such as the development of basic signal processing techniques, the incorporation of the latest deep learning technology, and implementation on robots that operate in real time.